As far as I understand test-driven development, you are only allowed to write production code when you have a failing (red) unit test.

Based on this, I am wondering whether the test-driven approach can also be applied to other forms of tests.


All TDD requires of you is that you write a failing test, then modify your code to make it pass.

Typically “unit tests” are small and fast and test some part of your code in isolation. Because they are fast, they keep the red/green/refactor loop fast too. However, they suffer from only testing parts in isolation, so you need other tests too (integration, acceptance, etc.). It is still good practice to follow the same principle there: write a failing test, then modify the code to make it pass. Just be aware that these tests are typically slower, so they can lengthen the red/green/refactor cycle time.
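As a minimal sketch of the red/green loop at the unit level: the tests below would be written first and seen failing (for example with a `NameError`, because `leap_year` does not exist yet), and only then would the function be added. The `leap_year` function and the test names are hypothetical, chosen purely for illustration.

```python
import unittest


def leap_year(year: int) -> bool:
    """Hypothetical unit under test, added only after the tests below were seen failing."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


class LeapYearTest(unittest.TestCase):
    # Red: each test is written before the code that makes it pass.
    def test_century_not_divisible_by_400_is_not_leap(self):
        self.assertFalse(leap_year(1900))

    def test_year_divisible_by_400_is_leap(self):
        self.assertTrue(leap_year(2000))

    def test_ordinary_year_divisible_by_4_is_leap(self):
        self.assertTrue(leap_year(2024))
```

Saved as a module, this can be run with `python -m unittest`. An integration or acceptance test would follow the same write-it, watch-it-fail, make-it-pass order, only with a slower feedback loop.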

The red/green/refactor cycle is built on one very sound principle:

Only trust tests that you have seen both pass and fail.

Yes, that works with automated integration tests as well. It also works with manual tests. Heck, it works on car battery testers. This is how you test the test.
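One way to picture “testing the test” is to point the same check at a deliberately broken implementation and confirm that it really fails. This is only a sketch: `apply_discount`, `broken_apply_discount`, and `can_fail` are made-up names, and in day-to-day TDD you normally get the failing run for free by writing the test before the code.

```python
def check_discount(discount_fn):
    """The test, parameterised over the implementation it exercises."""
    assert discount_fn(200.0, 0.25) == 150.0


def apply_discount(price, rate):
    """Hypothetical production code."""
    return price * (1 - rate)


def broken_apply_discount(price, rate):
    """Deliberately wrong: ignores the rate, so a trustworthy test must reject it."""
    return price


def can_fail(test, broken_impl):
    """Return True if the test raises AssertionError for the broken implementation."""
    try:
        test(broken_impl)
    except AssertionError:
        return True
    return False
```

If `can_fail(check_discount, broken_apply_discount)` came back `False`, the test would be passing for the wrong reason and should not be trusted.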

Some think of unit tests as covering the smallest thing that can be tested. Others think of anything that’s fast to test. TDD is more than just the red/green/refactor cycle, but that part uses a very specific set of tests: not the tests that you will ideally run once before submitting a collection of changes, but the tests that you will run every time you make any change. To me, those are your unit tests.


However, I am wondering if the test-driven approach can also be applied to other forms of tests.

Yes, and a well-known approach which does this is Behaviour-driven development. The tests which are generated from the formal spec in BDD might be called “unit tests”, but they are typically not as low-level as in real TDD; the term “acceptance tests” probably fits them better.

As far as I understand test-driven development, you are only allowed to write production code when you have a failing (red) unit test.

No. You are only allowed to write the simplest code possible to change the message of the test. It doesn’t say anything about what kind of test.

In fact, you will probably start by writing a failing (red) acceptance test for an acceptance criterion. More precisely, you write the simplest acceptance test that could possibly fail; then you run the test, watch it fail, and verify that it fails for the right reason. Then you write a failing functional test for a slice of functionality of that acceptance criterion: again, you write the simplest functional test that could possibly fail, run it, watch it fail, and verify that it fails for the right reason. Then you write a failing unit test, the simplest unit test that could possibly fail; you run it, watch it fail, and verify that it fails for the right reason.

Now, you write the simplest production code that could possibly change the error message. Run the test again, verify that the error message has changed, that it changed in the right direction, and that the code changed the message for the right reason. (Ideally, the error message should be gone by now, and the test should pass, but more often than not, it is better to take tiny steps changing the message instead of trying to get the test to pass in one go – that’s the reason why developers of test frameworks spend so much effort on their error messages!)

Once you get the unit test to pass, you refactor your production code under the protection of your tests. (Note that at this time, the acceptance test and the functional test are still failing, but that’s okay, since you are only refactoring individual units that are covered by unit tests.)

Now you create the next unit test and repeat the above, until the functional test also passes. Under the protection of the functional test, you can now do refactorings across multiple units.

This middle cycle now repeats until the acceptance test passes, at which point you can now do refactorings across the entire system.

Now, you pick the next acceptance criterion and the outer cycle starts again.
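The nested cycles described above can be sketched roughly as follows, using only two levels for brevity. Everything here is hypothetical (an order total in integer cents with made-up function names); the comments record the order in which the pieces would be written. The file is shown in its final, all-green state.

```python
# Outer cycle: written FIRST. It stays red while the inner cycles run.
def acceptance_test_order_total_includes_tax_and_shipping():
    lines = [("book", 1000, 2)]  # (name, unit price in cents, quantity)
    assert order_total(lines, tax_percent=10, shipping_cents=500) == 2700


# Inner cycle 1: unit test written second, then subtotal() to make it pass.
def unit_test_subtotal_sums_price_times_quantity():
    assert subtotal([("book", 1000, 2), ("pen", 150, 4)]) == 2600


def subtotal(lines):
    return sum(price * quantity for _name, price, quantity in lines)


# Inner cycle 2: next unit test, then add_tax() to make it pass.
def unit_test_add_tax_adds_percentage():
    assert add_tax(2000, tax_percent=10) == 2200


def add_tax(amount_cents, tax_percent):
    return amount_cents + amount_cents * tax_percent // 100


# Written last: the glue code that finally turns the acceptance test green.
def order_total(lines, tax_percent, shipping_cents):
    return add_tax(subtotal(lines), tax_percent) + shipping_cents
```

Refactoring inside `subtotal` or `add_tax` is protected by the unit tests; refactoring across both is protected by the acceptance test once it passes.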

Kent Beck, the “discoverer” of TDD (he doesn’t like the term “inventor”, he says people have been doing this all along, he just gave it a name and wrote a book about it) uses an analogy from photography and calls this “zooming in and out”.

Note: you don’t always need three levels of tests. Maybe, sometimes you need more. More often, you need fewer. If your pieces of functionality are small, and your functional tests are fast, then you can get by without unit tests (or with fewer of them). Often, you only need acceptance tests and unit tests. Or, your acceptance criteria are so fine-grained that your acceptance tests are functional tests.

Kent Beck says that if he has a fast, small, and focused functional test, he will first write the unit tests, let the unit tests drive the code, then delete (some of) the unit tests again that cover the code that is also covered by the fast functional test. Remember: test code is also code that needs to be maintained and refactored, the less there is, the better!

However, I am wondering if the test-driven approach can also be applied to other forms of tests.

You don’t really apply TDD to tests. You apply it to your entire development process. That’s what the “driven” part of Test-Driven Development means: all your development is driven by tests. The tests not only drive the code you write, they also drive what code to write and which code to write next. They drive your design. They tell you when you are done. They tell you what to work on next. They tell you about design flaws in your code (when tests are hard to write).

Keith Braithwaite has created an exercise he calls TDD As If You Meant It. It consists of a set of rules (based on Uncle Bob Martin’s Three Rules of TDD, but much stricter) that you must strictly follow and that are designed to steer you towards applying TDD more rigorously. It works best with pair programming (so that your pair can make sure you are not breaking the rules) and an instructor.

The rules are:

1. Write exactly one new test, the smallest test you can that seems to point in the direction of a solution
2. See it fail; compilation failures count as failures
3. Make the test from (1) pass by writing the least implementation code you can in the test method.
4. Refactor to remove duplication, and otherwise as required to improve the design. Be strict about using these moves:
   1. you want a new method: wait until refactoring time, then … create new (non-test) methods by doing one of these, and in no other way:
      - preferred: do Extract Method on implementation code created as per (3) to create a new method in the test class, or
      - if you must: move implementation code as per (3) into an existing implementation method
   2. you want a new class: wait until refactoring time, then … create non-test classes to provide a destination for a Move Method and for no other reason
   3. populate implementation classes with methods by doing Move Method, and no other way

These rules are meant for exercising TDD. They are not meant for actually doing TDD in production (although nothing stops you from trying it out). They can feel frustrating because it will sometimes seem as if you make thousands of teeny tiny little steps without making any real progress.
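To make the moves in the rules above more concrete, here is a rough sketch of the three stages a piece of logic might pass through. The example (detecting a winning row) and all names are invented for illustration, module-level functions stand in for a test class, and all three stages are shown side by side even though in a real session each stage would replace the previous one.

```python
# Stage 1 (rule 3): the least implementation code, written inside the test itself.
def test_three_in_a_row_wins_inline():
    row = ["X", "X", "X"]
    winner = row[0] if row[0] is not None and row.count(row[0]) == len(row) else None
    assert winner == "X"


# Stage 2 (rule 4, preferred move): Extract Method, still living next to the tests.
def row_winner(row):
    return row[0] if row[0] is not None and row.count(row[0]) == len(row) else None


def test_three_in_a_row_wins_extracted():
    assert row_winner(["X", "X", "X"]) == "X"


def test_mixed_row_has_no_winner():
    assert row_winner(["X", "O", "X"]) is None


# Stage 3: a non-test class is created only as a destination for Move Method.
class Row:
    def __init__(self, cells):
        self.cells = list(cells)

    def winner(self):
        first = self.cells[0]
        if first is not None and self.cells.count(first) == len(self.cells):
            return first
        return None


def test_three_in_a_row_wins_moved():
    assert Row(["X", "X", "X"]).winner() == "X"
```

The point of the exercise is that `Row` never appears speculatively: it only exists because tested code needed somewhere to move to.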

TDD is not at all limited to what the traditional Software Testing community calls “unit testing”. This very common misunderstanding is the result of Kent Beck’s unfortunate overloading of the term “unit” when describing his practice of TDD. What he meant by “unit test” was a test that runs in isolation. It is not dependent on other tests. Each test must set up the state it needs and do any cleanup when it is done. It is in this sense that a unit test in the TDD sense is a unit. It is self-contained. It can run by itself or it can be run along with any other unit test in any order.
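A small sketch of what “runs in isolation” can look like in practice, using Python’s `unittest`: each test creates its own scratch directory in `setUp` and removes it in `tearDown`, so the tests pass in either order. The `NoteFileTest` class and the `run_in_order` helper are illustrative names, not anything from Beck’s book.

```python
import io
import os
import shutil
import tempfile
import unittest


class NoteFileTest(unittest.TestCase):
    def setUp(self):
        # Every test gets a fresh directory; nothing leaks in from other tests.
        self.workdir = tempfile.mkdtemp()
        self.path = os.path.join(self.workdir, "notes.txt")

    def tearDown(self):
        # Clean up so nothing leaks out to other tests either.
        shutil.rmtree(self.workdir)

    def test_file_does_not_exist_initially(self):
        self.assertFalse(os.path.exists(self.path))

    def test_written_note_can_be_read_back(self):
        with open(self.path, "w", encoding="utf-8") as handle:
            handle.write("buy milk")
        with open(self.path, encoding="utf-8") as handle:
            self.assertEqual(handle.read(), "buy milk")


def run_in_order(test_names):
    """Run the named tests in exactly the given order and report overall success."""
    suite = unittest.TestSuite(NoteFileTest(name) for name in test_names)
    runner = unittest.TextTestRunner(stream=io.StringIO())
    return runner.run(suite).wasSuccessful()
```

If the second test relied on a file left behind by the first (or vice versa), one of the two orderings passed to `run_in_order` would fail.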

Reference: “Test Driven Development By Example”, by Kent Beck

Kent Beck describes what he means by “unit test” in Chapter 32 – Mastering TDD

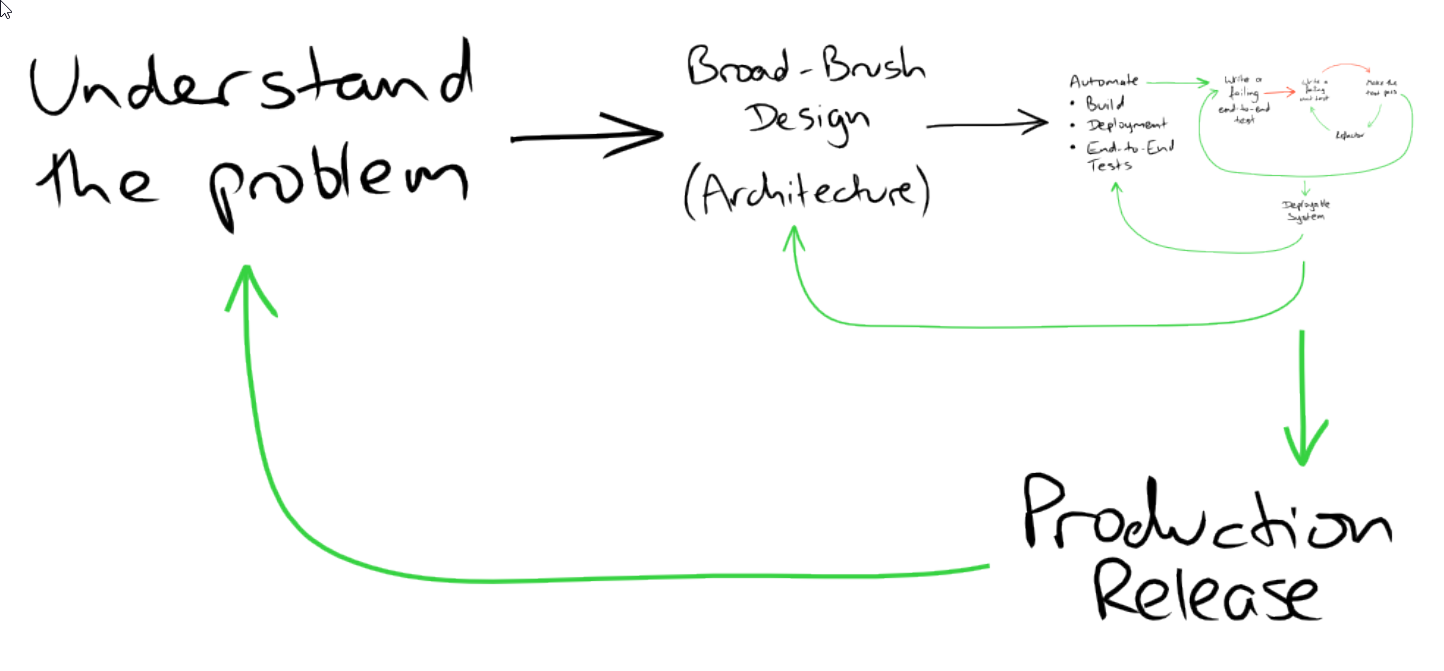

In the talk Test-Driven Development: This is not what we meant, Steve Freeman shows the following slide of the TDD big picture (see image below answer). This includes a step “Write a failing end-to-end test”, which is followed by “Write a failing unit test” (it is in the top right of the slide).

So no, in TDD the tests are not always unit tests.

And yes, you can (and maybe should) start with a higher-level end-to-end test that fails before you write your first unit test. This test describes the behavior you want to achieve. This generates coverage on more levels of the test pyramid. Adrian Sutton explains LMAX’s experience, which shows that end-to-end tests can play a large and valuable role.

I haven’t read books on it, nor do I completely follow the “standard” TDD practices all the time, but in my mind the main point of the TDD philosophy, which I completely agree with, is that you have to define success first. This is important at every level of design, from “What is the goal of this project?” to “What should the inputs and outputs of this little method be?”

There are a lot of ways to do this definition of success. A useful one, particularly for those low level methods with potentially many edge cases, is to write tests in code. For some levels of abstraction, it can be useful just to write down a quick note about the goal of the module or whatever, or even just mentally check yourself (or ask a co-worker) to make sure everything makes sense and is in a logical place. Sometimes it’s helpful to describe an integration test in code (and of course that helps one to automate it), and sometimes it’s helpful just to define a reasonable quick test plan you can use to make sure all the systems are working together in the way you are expecting.

But regardless of the specific techniques or tools you’re using, in my mind the key thing to take away from the TDD philosophy is that defining success happens first. Otherwise, you’re throwing the dart and then painting the bullseye around wherever it happened to land.

I’ll base this on my personal experience of using TDD over the last 12 years.

TDD is a great coding technique to solve complex problems incrementally, not worrying about what your upfront design / model needs to be.

The idea is to infer your design and focus on the outputs & results of what you are trying to achieve.

It’s far more commonly used at a unit level, because that’s usually where the ‘guts’ of your application logic is going to sit.

It is a technique that can potentially be used at a higher level (smoke test, integration test, end-to-end test), but it’s far less common to see that.

For a process of testing to be considered ‘TDD’, you must have a failing test before any production code is written.

Due to this, it’s indeed rare you will encounter developers writing a full integration test without having any production code beforehand.

So you may decide to write an integration test that hits a controller endpoint with some basic parameters and checks a customer record is saved in the database.
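Such a test-first integration test could look roughly like the sketch below. It makes several simplifying assumptions: the “controller endpoint” is a plain function called `post_customers`, the database is an in-memory SQLite instance, and the `customers` table layout is made up. In the TDD flow the test function would exist, and fail, before `post_customers` was written.

```python
import sqlite3


def create_schema(conn):
    """Hypothetical schema for the example."""
    conn.execute(
        "CREATE TABLE customers ("
        "id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT NOT NULL)"
    )


def post_customers(conn, params):
    """Stand-in for a controller endpoint; a real one would sit behind HTTP routing."""
    cursor = conn.execute(
        "INSERT INTO customers (name, email) VALUES (?, ?)",
        (params["name"], params["email"]),
    )
    conn.commit()
    return {"status": 201, "id": cursor.lastrowid}


def integration_test_posting_a_customer_saves_a_record():
    conn = sqlite3.connect(":memory:")
    try:
        create_schema(conn)
        response = post_customers(conn, {"name": "Ada", "email": "ada@example.com"})
        assert response["status"] == 201
        # Check the side effect in the database, not just the response.
        row = conn.execute(
            "SELECT name, email FROM customers WHERE id = ?", (response["id"],)
        ).fetchone()
        assert row == ("Ada", "ada@example.com")
    finally:
        conn.close()
```

Against a real web framework and database the shape stays the same, but each run is slower, which is part of why this style is seen less often.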

It’s rare that people do that in my experience. Far more commonly, I’ve seen people write the outside-in tests after they already have some semblance of a reachable API. In that case, it’s not TDD.

I’ve seen people within the industry use the term TDD to describe writing any tests around code. That is simply not what TDD is, because the main benefit of doing TDD is inferring a design within your code and attempting to reach the leanest, simplest code solution by focusing on the outputs, not the implementation.

No, it cannot be applied to other kinds of tests, for a simple practical reason: other types of tests take too long to execute.

The typical TDD cycle is: write a failing test, implement, refactor the code. The steps in between are building and executing the tests, and these need to be lightning fast. If they are not, people start skipping steps, and then you are not doing TDD anymore.
